Designing a Zero-to-One AI Tool for Oncologists

Translating LLM outputs into structured, clinically actionable workflows in a regulated oncology environment

Role: Senior AI Product Designer

Scope: UX research, AI output structuring, interaction design, visual design, systems thinking, governance

Context: AI startup partnering with AVL to pilot a PoC at the Netherlands Cancer Institute

Timeline: 6 months

The context

In oncology, when a new patient enters care, attending physicians must rapidly synthesise their full medical history, often scattered across dozens of unstructured documents.

To address this, we set out to build an AI-powered system leveraging large language models (LLMs) to extract, structure and present clinically relevant information as a usable consult preparation summary. This initiative was part of a broader exploration of how foundation models could responsibly support clinicians in real-world, high-stakes environments.

At the time, there was no defined product. As the first designer, I helped shape the problem space: mapping the patient journey and identifying where structured AI outputs could meaningfully reduce cognitive load without compromising clinical judgement.

The core challenge

Preparing for a patient consult in oncology requires rapid synthesis of fragmented medical histories spread across multiple unstructured documents.

While LLMs can extract and summarize information, they introduce inherent risks:

Non-deterministic outputs

Hallucinations

Inconsistent structure

Limited transparency about uncertainty

The challenge was not designing a summary interface.

It was defining a structured interaction model for probabilistic AI outputs, one that supports fast clinical understanding while preserving traceability and human judgment.

This required balancing:

Speed vs. safety

Automation vs. clinician control

Feature ambition vs. implementation readiness

The goal was not to replace clinical reasoning, but to responsibly augment consult preparation within real-world constraints.

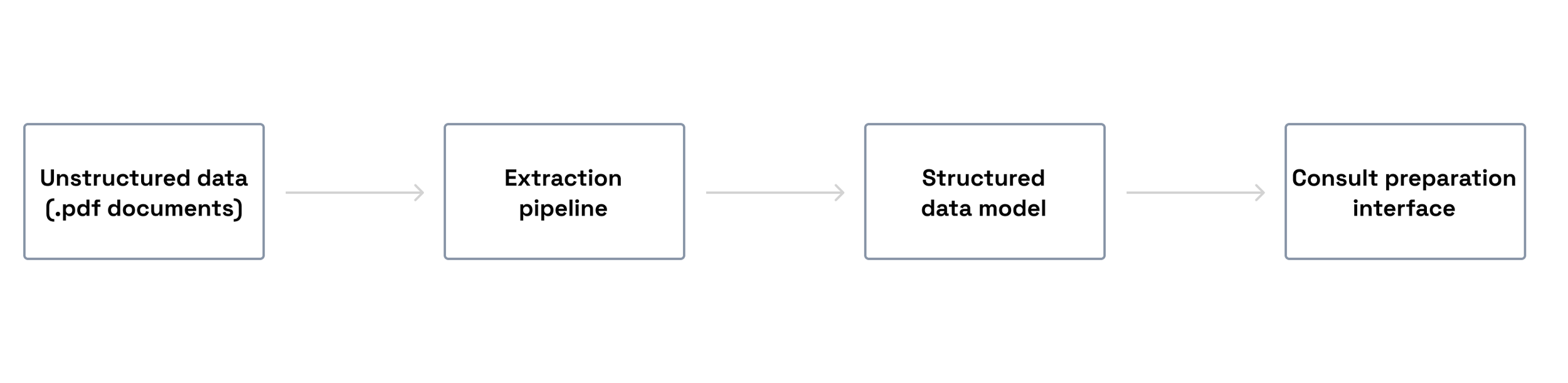

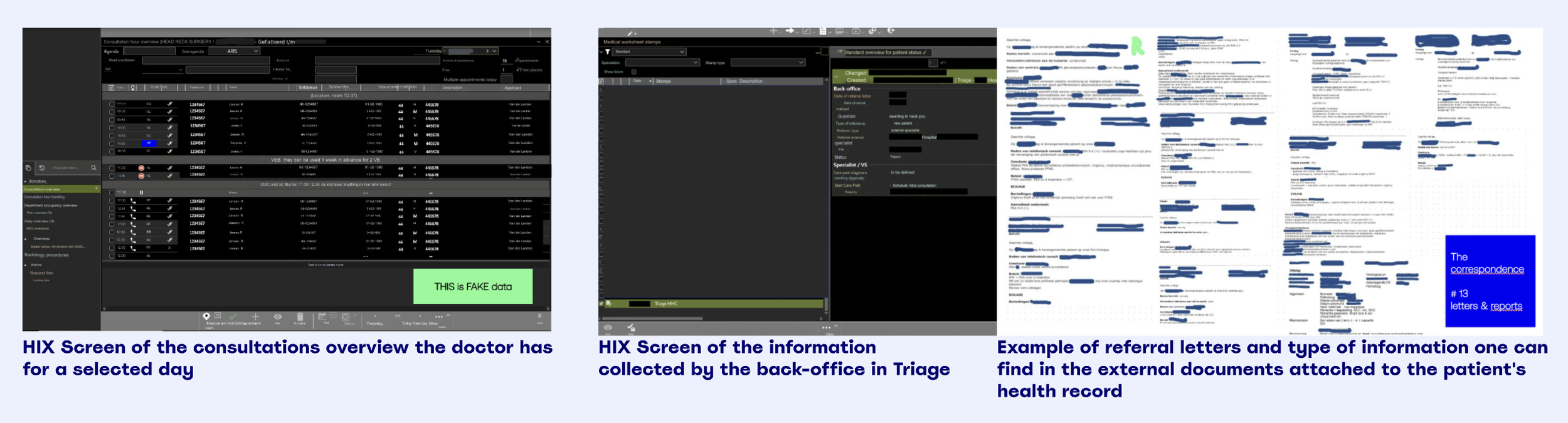

How consult preparation works today: EHR examples and documents attached to a patient

My role and scope

As the first and lead UX designer, I initially operated without formal product leadership, collaborating closely with:

A technical lead (engineering project lead)

An internal medical expert (former surgeon)

An external oncology subject matter expert (Head & Neck surgeon)

My role extended beyond experience design. I shaped product direction by:

Defining the initial patient summary structure before UI formalisation

Influencing what the AI model should extract based on clinical relevance

Leading and synthesizing 20+ cognitive walkthrough interviews with physicians

Driving scope and roadmap discussions under evolving AI capabilities

Prioritizing minimum viable clinical understanding over speculative AI features

Supporting implementation while laying foundations for scalable design governance

In the absence of formal product management, design became the stabilizing force aligning engineering feasibility, clinical needs, and delivery timelines.

Research & Structural Validation

To ground product decisions in clinical reality, I led 20+ cognitive walkthrough interviews with physicians across departments, including collaboration with external stakeholders at the Netherlands Cancer Institute.

These sessions focused on:

What clinicians need to understand first

How they mentally structure patient understanding

What errors would be unacceptable

Where AI-generated summaries could create overtrust

The key insight:

Clinicians do not need more information. They need structured clarity under time pressure.

This led to the definition of a minimal yet clinically complete patient summary structure that balanced readability with necessary depth.

That structure became the foundation of the PoC and continues to inform the product's evolution toward production.

“This could make even a bad doctor be a good doctor”

A surgeon from the Netherlands Cancer Institute, during one of our interviews

Defining the Extraction Structure

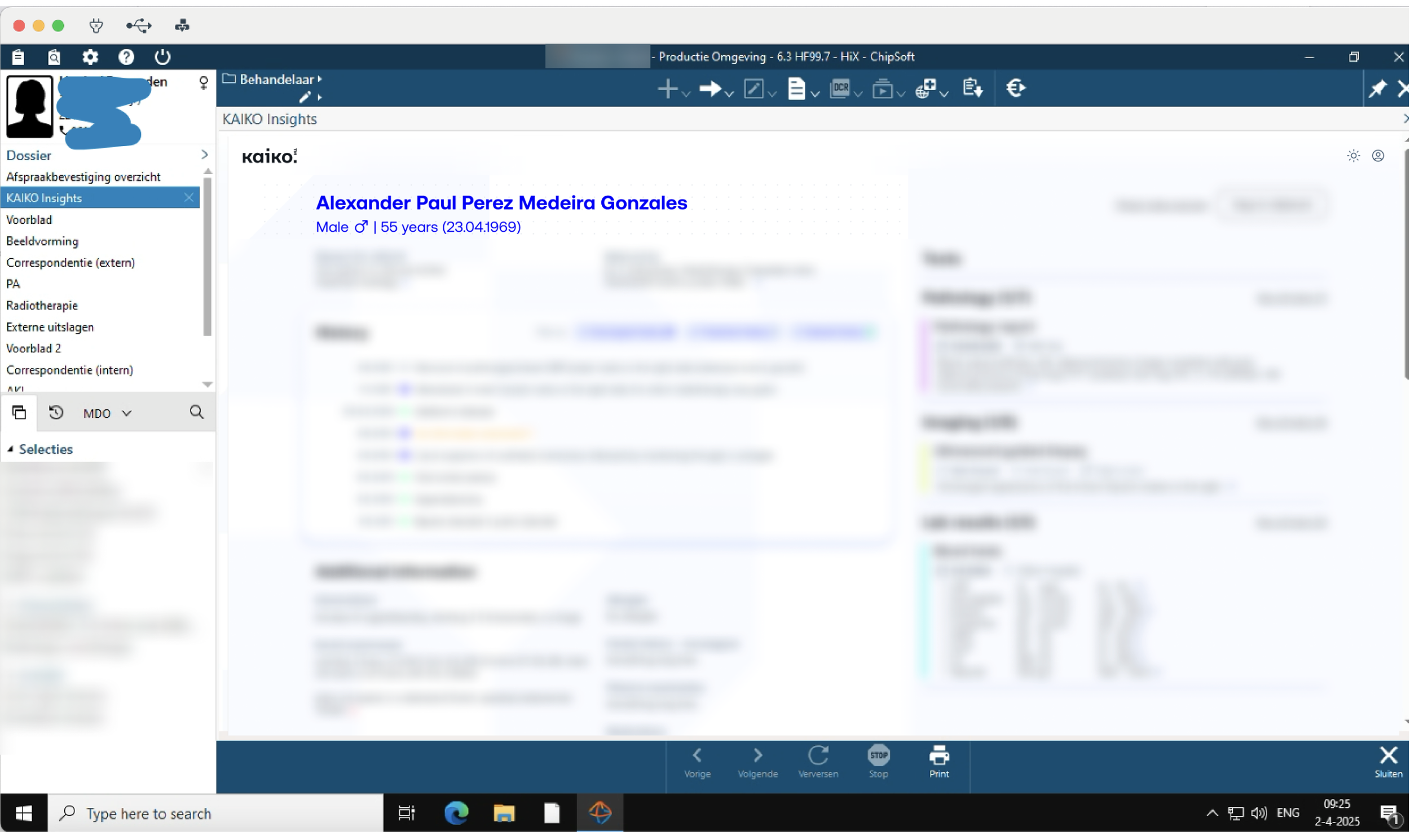

The final UI design for the PoC screen. Blurred for NDA purposes.

Before designing high-fidelity UI, I proposed an initial structural model for what the AI should extract and how information should be grouped.

This included:

Defining grouping logic for related clinical information

Establishing hierarchy for rapid cognitive scanning

Identifying minimum essential data required for consult readiness

Aligning extraction structure with real clinical workflows

The structure was refined weekly with internal and external medical experts.

Early explorations included advanced visualizations (e.g., 3D patient representations) and AI-generated recommendations. These were intentionally removed from the PoC due to safety considerations, pipeline maturity, and risk of cognitive overload.

The final system prioritised structural clarity over visual novelty.

Handling AI Constraints & Uncertainty

Because the system relied on document extraction rather than prediction:

Each extracted element was visually linked to its source document

Where document context was uncertain, warnings were introduced

Narrative free-text summaries were minimized in favor of structured sections

This approach preserved clinician agency and reduced the risk of overtrust.

Design decisions were made pragmatically within the constraints of extraction speed and pilot pipeline limitations.
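To make the traceability rules concrete, here is a minimal sketch of how an extracted element might carry its source link and an uncertainty flag, and how on-screen warnings could be derived from them. It is written in Python purely for illustration; the class names, fields (`document_id`, `context_uncertain`), section labels and warning texts are hypothetical and not taken from the actual PoC.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class SourceRef:
    """Hypothetical pointer back to the document a value was extracted from."""
    document_id: str
    page: Optional[int] = None


@dataclass
class ExtractedField:
    """One structured element of the summary (illustrative model, not the real schema)."""
    section: str                  # grouping used for rapid scanning
    label: str
    value: str
    source: Optional[SourceRef]   # None means no source could be linked
    context_uncertain: bool = False

    def warnings(self) -> List[str]:
        # Surface uncertainty instead of hiding it behind a fluent narrative.
        issues = []
        if self.source is None:
            issues.append("No source document linked; verify before use.")
        if self.context_uncertain:
            issues.append("Document context uncertain; check the source.")
        return issues


def group_by_section(fields: List[ExtractedField]) -> Dict[str, List[ExtractedField]]:
    # Preserve first-seen section order so the hierarchy stays stable.
    grouped: Dict[str, List[ExtractedField]] = {}
    for f in fields:
        grouped.setdefault(f.section, []).append(f)
    return grouped


def render(fields: List[ExtractedField]) -> str:
    # Plain-text stand-in for the UI: every value shows its source and any warnings.
    lines = []
    for section, items in group_by_section(fields).items():
        lines.append(section)
        for f in items:
            src = f.source.document_id if f.source else "unlinked"
            lines.append(f"  {f.label}: {f.value} [source: {src}]")
            for w in f.warnings():
                lines.append(f"    ! {w}")
    return "\n".join(lines)
```

Keeping provenance and uncertainty on each field, rather than in a free-text narrative, is what would let an interface place a source link and a warning next to every value.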

Outcome & Impact

This initiative resulted in the company’s first operational AI proof of concept, deployed within a partner hospital’s EHR environment and piloted by 5 clinicians during real consult preparation.

Beyond the pilot, the defined patient summary structure and interaction model established a shared product direction, reduced delivery ambiguity, and became the structural foundation for subsequent AI initiatives.

The PoC integrated into the EHR. Blurred as it is subject to an NDA.

Reflection

The main risk throughout this project was overtrust in AI within high-stakes clinical environments. Even if clinicians express confidence, real-world time pressure can encourage cognitive shortcuts. This reinforced the need for traceability, visible uncertainty, and structural clarity over persuasive automation.

As additional AI proofs of concept emerged in radiology and pathology, a second challenge became evident: how to ensure structural coherence across domains. I worked to align interaction principles and patient information models across initiatives. The experience highlighted the importance of early governance and shared structural foundations when building AI systems in regulated contexts.